Persistent Memory vs RAM in 2025: CXL & NVDIMM-P Guide

- Intro – The Memory Gap in 2025

- 1. What Is Persistent Memory?

- 2. Persistent Memory vs RAM – Key Differences

- 3. Optane Is Gone – Now What?

- 4. Two Next-Gen Alternatives

- 5. NVDIMM-P vs CXL – When to Use Which

- 5. Real-World Workloads Benefiting from PMEM

- 6. Total Cost of Ownership (TCO) Modeling

- 7. The Road Ahead (2026–2028)

- FAQ: Persistent Memory

- Conclusion: Persistent Memory Is No Longer Optional

Key Takeaways

- Persistent memory fills the gap between fast but expensive DRAM and large but slow SSDs, delivering ~120–500 ns latency at a lower cost per GB than DRAM.

- NVDIMM-P connects via the DDR5 memory bus and offers low-latency, socket-local persistence—ideal for in-memory databases and fast-restart apps.

- CXL Type-3 memory uses the PCIe interface to provide composable memory pooling across hosts—great for scalable AI, edge, and DPU-powered systems.

- Intel Optane is discontinued, so forward-looking deployments should focus on NVDIMM-P or CXL-based solutions for persistent memory needs.

- DRAM and PMEM can be mixed using Memory Mode or App Direct Mode, depending on OS and platform support (e.g., Intel Granite Rapids, AMD Turin).

- PMEM adoption will accelerate from 2025 to 2028, with innovations like MR-DIMM, CXL 3.0 fabrics, and HBM-PIM driving the next wave of memory architecture.

Intro – The Memory Gap in 2025

Why Persistent Memory Is Back in the Spotlight

Modern servers are fast. But not fast enough.

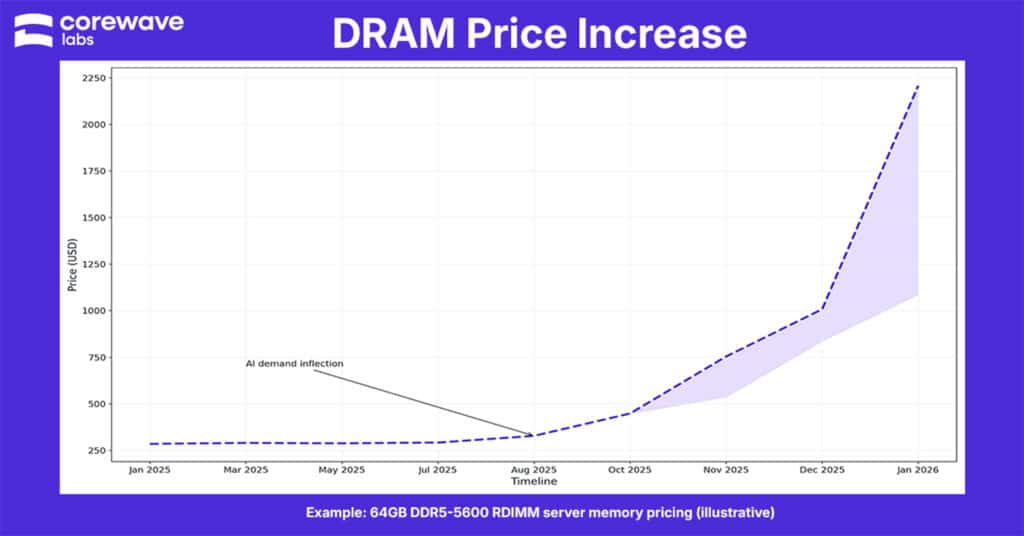

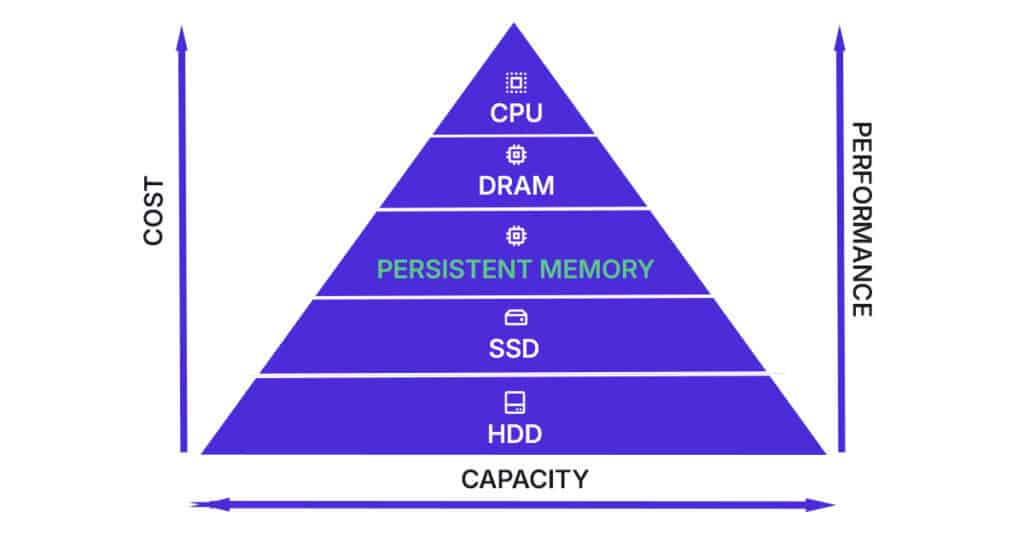

In 2025, DRAM delivers nanosecond-scale latency but costs a fortune per gigabyte and caps out quickly. SSDs offer terabytes of capacity, but with access times roughly 1,000× slower (about 80,000 ns versus 80 ns). Somewhere between those extremes is a performance canyon that traditional hardware architectures haven’t bridged effectively.

That’s where persistent memory (PMEM) steps back into the spotlight.

With Intel Optane officially sunset, engineers are left asking:

What fills the gap between DRAM and NAND now?

The answer lies in two next-gen directions:

- NVDIMM-P: Persistent memory on the DDR5 memory bus

- CXL Type-3: Fabric-attached memory expansion over PCIe

Whether you’re optimizing SAP HANA restart times, compressing latency in VM caching, or prepping for composable memory fabrics, understanding PMEM’s role in your stack is no longer optional.

1. What Is Persistent Memory?

Persistent memory (PMEM) is a hybrid memory/storage class that pairs near-DRAM access speed with the non-volatility of NAND. It behaves like memory but keeps data even when the power is cut.

Unlike SSDs, PMEM is byte-addressable and is accessed with ordinary CPU load/store instructions. It attaches either through DIMM slots on the memory bus (NVDIMM-P) or through PCIe lanes using CXL.

Key characteristics:

- Non-volatile (retains data across reboots)

- Lower latency than NAND (~120–500 ns vs 80,000+ ns)

- Higher capacity per socket than DRAM

- Byte-addressable, unlike block-based SSDs
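To make byte-addressability concrete, here is a minimal Python sketch of load/store-style access through a memory-mapped file. On a real system the file would typically live on a DAX-mounted PMEM filesystem; the `/mnt/pmem0` path mentioned in the comments is a hypothetical mount point, and the sketch uses a temporary file so it runs anywhere. On ordinary storage the same calls go through the page cache, so this illustrates the programming model, not PMEM performance.

```python
import mmap
import os
import tempfile

REGION_SIZE = 4096  # one page for the demo; real PMEM regions are far larger


def open_region(path: str, size: int = REGION_SIZE) -> mmap.mmap:
    """Map a file into the process address space, sizing it if needed."""
    fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o600)
    try:
        if os.fstat(fd).st_size < size:
            os.ftruncate(fd, size)
        return mmap.mmap(fd, size)  # shared read/write mapping by default
    finally:
        os.close(fd)  # the mapping remains valid after the descriptor closes


def store_counter(region: mmap.mmap, offset: int, value: int) -> None:
    """Update 8 bytes in place, without any block-sized I/O in app code."""
    region[offset:offset + 8] = value.to_bytes(8, "little")
    region.flush()  # ask the OS to write the mapped changes back to the file


def load_counter(region: mmap.mmap, offset: int) -> int:
    """Read the same 8 bytes back as an integer."""
    return int.from_bytes(region[offset:offset + 8], "little")


# Hypothetical usage: on a PMEM host, `path` might sit under /mnt/pmem0.
path = os.path.join(tempfile.mkdtemp(), "counter.bin")
region = open_region(path)
store_counter(region, 64, 42)
region.close()

# Re-open the mapping to show the value outlived the first mapping.
region = open_region(path)
restored = load_counter(region, 64)
region.close()
print(restored)
```

Production PMEM code usually relies on a library such as PMDK for crash-consistent updates; the plain `flush()` here only stands in for that step.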

Common Types of Persistent Memory in 2025

| Type | Interface | Example Products | Status |
|---|---|---|---|
| Intel Optane DCPMM | DDR4 | Optane PMem 100/200 series | Discontinued |
| NVDIMM-P | DDR5 | Micron, Samsung prototypes | Sampling 2025 |
| CXL Type-3 Devices | PCIe 5.0/6.0 | Astera Labs, Samsung modules | Early deployment |

Where It Fits in the Memory Hierarchy

Here’s a simplified memory-storage latency ladder for 2025:

DRAM (80 ns)

↓

NVDIMM-P (~120 ns)

↓

CXL Type-3 Memory (~350 ns)

↓

NVMe SSD (~80,000 ns)

Persistent memory slots into the “warm tier”—bridging the gap between fast but volatile DRAM and slow but persistent NAND.
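As a quick sanity check on the ladder above, the following sketch computes how each tier compares with DRAM and with NVMe. The latency values are the approximate figures from the ladder, not measurements, and the function names are illustrative.

```python
# Approximate average latencies (ns) taken from the ladder above.
LATENCY_NS = {
    "DRAM": 80,
    "NVDIMM-P": 120,
    "CXL Type-3": 350,
    "NVMe SSD": 80_000,
}


def slowdown_vs_dram(tier: str) -> float:
    """How many times slower a tier is than DRAM."""
    return LATENCY_NS[tier] / LATENCY_NS["DRAM"]


def speedup_vs_ssd(tier: str) -> float:
    """How many times faster a tier is than an NVMe SSD."""
    return LATENCY_NS["NVMe SSD"] / LATENCY_NS[tier]


for tier in LATENCY_NS:
    print(f"{tier:<11} {slowdown_vs_dram(tier):7.1f}x DRAM latency, "
          f"{speedup_vs_ssd(tier):7.1f}x faster than NVMe")
```

With these inputs, NVDIMM-P lands at 1.5× DRAM latency and CXL Type-3 at roughly 4.4×, while both stay more than two orders of magnitude ahead of NVMe.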

2. Persistent Memory vs RAM – Key Differences

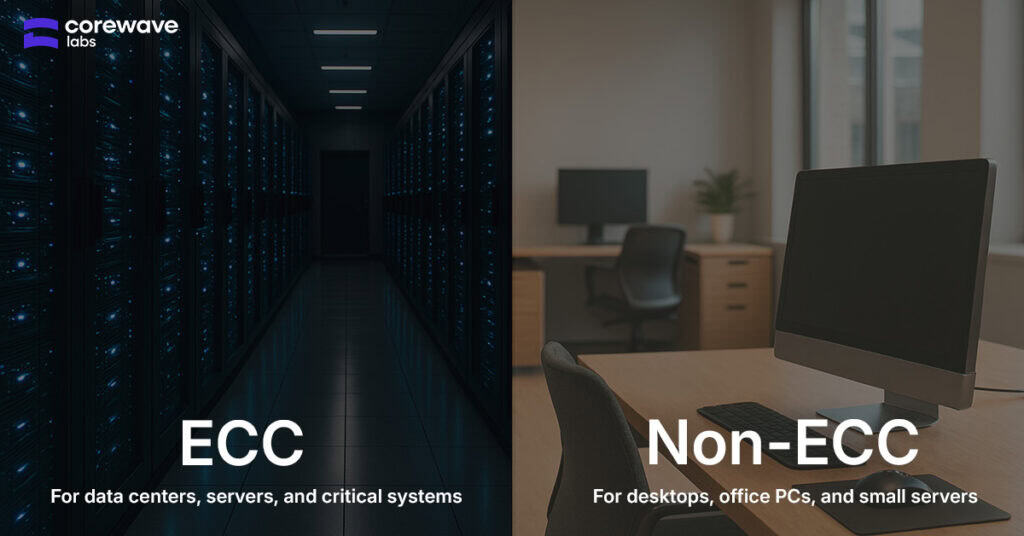

While DRAM is the de facto standard for volatile system memory, persistent memory introduces a new tier with distinct tradeoffs. Here’s how they compare across critical technical and economic dimensions:

| Metric | DRAM (DDR5 RDIMM) | Persistent Memory (PMEM) |
|---|---|---|
| Speed/Latency | 80–100 ns (lowest) | 120–500 ns (moderate) |
| Volatility | Volatile | Non-volatile |
| Endurance | Very High | Medium (20–60 DWPD) |
| Capacity per Module | 32–128 GB | 128–512 GB |
| Cost per GB | ~€8/GB | ~€3/GB |

Speed / Latency

- DRAM: ~80–100 ns (reads/writes directly on memory bus)

- PMEM: ~120–500 ns depending on form factor (NVDIMM-P vs CXL)

PMEM is slower, but still orders of magnitude faster than SSDs. For workloads that tolerate minor latency penalties in exchange for capacity or persistence, it’s a strong fit.

Volatility

- DRAM: Fully volatile — loses all data on power loss

- PMEM: Non-volatile — retains data across reboots and crashes

This enables fast-restart use cases (e.g., SAP HANA) and benefits write-heavy systems that rely on journaling or tiered memory management.

Endurance

- DRAM: Effectively unlimited (commonly cited at 10¹⁵ write cycles or more; wear is negligible)

- PMEM: Limited endurance (~60 DWPD for Optane-class; 20–40 DWPD expected for NVDIMM-P / CXL Type-3)

PMEM endurance sits between that of DRAM and SSDs, and it is high enough for most enterprise read/write cycles, especially when PMEM is used as a cache or journaling layer.
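To put a DWPD (drive writes per day) rating in perspective, the sketch below converts it into total lifetime writes and the average write rate that would use up the daily budget. The 512 GB module size comes from the capacity range in this article; the 5-year rating period and the decimal units are assumptions for illustration.

```python
SECONDS_PER_DAY = 86_400


def lifetime_writes_tb(capacity_gb: float, dwpd: float, years: float = 5.0) -> float:
    """Total data (decimal TB) writable over the rated period."""
    return capacity_gb * dwpd * 365 * years / 1000


def sustained_write_mb_s(capacity_gb: float, dwpd: float) -> float:
    """Average write rate (MB/s) that exactly consumes the daily write budget."""
    return capacity_gb * dwpd * 1000 / SECONDS_PER_DAY


# Example: a 512 GB module at the low end of the expected range (20 DWPD).
total_tb = lifetime_writes_tb(512, 20)
rate_mb_s = sustained_write_mb_s(512, 20)
print(f"Lifetime writes: {total_tb:,.0f} TB")
print(f"Sustainable average write rate: {rate_mb_s:.1f} MB/s")
```

Under these assumptions, 20 DWPD on 512 GB works out to about 18,688 TB over five years, or an average of roughly 118.5 MB/s of writes around the clock.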

Capacity per Module

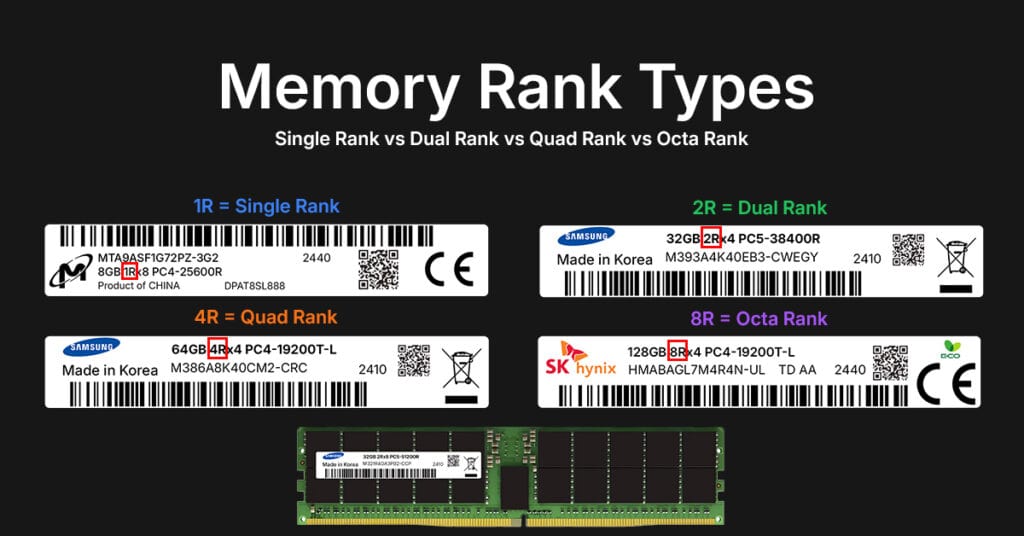

- DRAM: Typically 32–64 GB per RDIMM; high-density 128 GB RDIMMs at a premium

- PMEM: 128 GB–512 GB per module (2025), higher with CXL pooling

This enables 2×–4× memory expansion per socket, especially valuable in data-intensive and virtualized environments.

Cost per GB

- DRAM: ~€7–9/GB in Q2 2025

- PMEM: ~€2.5–4/GB (Optane-class); NVDIMM-P & CXL expected to drop below €3/GB at volume

Lower cost per GB makes PMEM ideal for memory-bound workloads where cost-efficiency matters more than peak bandwidth.

3. Optane Is Gone – Now What?

The End of Intel Optane

Intel officially pulled the plug on its Optane product line in mid-2022, with the final production runs of P5800X SSDs and DCPMM (3D XPoint) memory modules wrapping up between 2023 and early 2024.

| Event | Milestone |
|---|---|
| July 2022 | Intel exits Optane business |
| Q1 2023 | Final Optane orders accepted |
| Q3 2023 | DCPMM EOL confirmed for all SKUs |
| Q1 2024 | P5800X SSD supply enters depletion |
| 2025 | Secondary market remains, but support limited |

2025 Supply & Compatibility Challenges

If you’re still deploying Optane, expect the following risks:

- Sourcing issues: Only refurbished or old-stock units available

- Firmware gaps: BIOS/microcode support fading for new platforms

- Spare part availability: No new stock from Intel or official resellers

- Platform lock-in: Only works on specific Xeon CPUs (Cascade Lake, Cooper Lake, Ice Lake)

In short: Optane is not a viable long-term solution for new deployments.

Short-Term Workarounds (2025 Stopgaps)

- Leverage existing stock in “cold standby” failover nodes (non-critical roles)

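- Keep software PMEM-ready: ext4 and XFS offer a `dax` mount option on PMEM-backed devices, and Linux can emulate such a device from reserved DRAM (the `memmap=` kernel parameter) for development and testing. Standard NVMe SSDs do not support DAX, so they can only approximate persistent-tier behavior through the regular page cache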

- Deploy NVMe-over-Fabrics + RAM caching to fill gaps until CXL/NVDIMM-P is production-ready

However, real long-term answers lie in NVDIMM-P and CXL Type-3 memory modules—covered in the next section.

4. Two Next-Gen Alternatives

With Intel Optane discontinued, two serious contenders have emerged to fill the persistent memory gap:

NVDIMM-P and CXL Type-3 memory devices.

a) NVDIMM-P (DDR5-Based Persistent Memory)

What it is:

NVDIMM-P is a JEDEC-standard memory module that combines non-volatile media (like ReRAM or future 3D XPoint alternatives) with DRAM buffers and operates over the native DDR5 interface.

The JEDEC JESD304-4.01 specification defines how NVDIMM-P modules operate on the DDR4/DDR5 interface alongside traditional DRAM.

Read the standard on JEDEC’s official site.

How it connects:

- Plugs directly into standard DDR5 DIMM slots

- Operates over the CPU’s memory bus, not PCIe

- Offers both App-Direct and Memory Mode, similar to Optane DCPMM

Latency & Performance:

- ~120–150 ns latency (very close to DRAM)

- Bandwidth: comparable to DDR5-4800

- Capacity per module: 128 GB to 512 GB expected by late 2025

- Power loss protection via onboard capacitors

OS Support:

- Linux: the existing PMEM tooling (PMDK, ndctl, daxctl) is expected to carry over to NVDIMM-P; verify against shipping modules, since hardware is still sampling

- Windows Server 2025: DAX support in NTFS and ReFS

- Compatible with existing PMEM-aware apps: SAP HANA, Redis, RocksDB, VMware

Ideal Use Cases:

- In-memory databases with fast restart requirements

- Write-heavy apps needing persistence (logging, journaling, OLTP)

- Virtualized workloads where DRAM is cost-prohibitive

b) CXL Type-3 Memory Devices

What it is:

CXL (Compute Express Link) Type-3 devices enable memory expansion and pooling over PCIe, allowing memory to live outside the CPU socket, attached via a fabric.

How it connects:

- Uses PCIe 5.0 / 6.0 physical lanes

- Enables device memory to be mapped into system address space

- Protocols:

  - CXL.io: control plane (PCIe-compatible)

  - CXL.mem: load/store access to device memory

  - CXL.cache: optional cache coherency for host–device interaction

Latency & Performance:

- ~300–500 ns round-trip latency

- Higher than NVDIMM-P but much lower than SSDs or NVMe-over-Fabrics

- Bandwidth: PCIe-dependent (x8 or x16)

Pooled Memory Advantage:

- Enables multi-host memory pooling with CXL switches

- Perfect for disaggregated architectures (e.g., DPUs, composable infrastructure)

OS Support:

- Linux 5.12+ includes CXL core driver

- Tools: cxl-cli, memdev, region, and standard PMEM file systems

- Actively being adopted in hyperscaler and HPC environments
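Before reaching for any tooling, it can help to check whether the kernel has registered any persistent-memory or CXL devices at all. The sketch below lists entries on the `nd` (libnvdimm) and `cxl` sysfs buses on Linux. It assumes a Linux host with sysfs mounted at `/sys`, and it returns empty lists on machines without the drivers or hardware. The helper names are illustrative.

```python
from pathlib import Path

# Sysfs buses registered by the Linux libnvdimm and CXL subsystems.
NVDIMM_BUS = Path("/sys/bus/nd/devices")
CXL_BUS = Path("/sys/bus/cxl/devices")


def list_devices(bus_dir: Path) -> list[str]:
    """Return device names on a sysfs bus, or [] if the bus is absent."""
    if not bus_dir.is_dir():
        return []
    return sorted(entry.name for entry in bus_dir.iterdir())


def summarize() -> dict[str, list[str]]:
    """Collect device names for both buses in one dictionary."""
    return {
        "nvdimm": list_devices(NVDIMM_BUS),
        "cxl": list_devices(CXL_BUS),
    }


if __name__ == "__main__":
    for bus, devices in summarize().items():
        print(f"{bus}: {devices or 'no devices found'}")
```

This only shows what the kernel has enumerated; creating namespaces or regions still goes through the dedicated command-line tools.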

Ideal Use Cases:

- AI inference or caching workloads that don’t need lowest latency

- Memory expansion in dense edge servers or cloud nodes

- Future-ready setups using fabric-attached memory with DPUs

For more on the CXL memory specification and its roadmap, visit the Compute Express Link Consortium.

5. NVDIMM-P vs CXL – When to Use Which

Latency Budgets

| Memory Type | Avg. Latency |
|---|---|
| DDR5 DRAM | ~80 ns |
| NVDIMM-P | ~120–150 ns |
| CXL Type-3 | ~350–500 ns |
| NVMe SSD | ~80,000 ns |

If latency matters (Redis, OLAP, OLTP), go NVDIMM-P.

If you’re optimizing for flexibility and scaling, go CXL.

Infrastructure Constraints

| Constraint | Favor |
|---|---|
| Limited DDR5 DIMM slots | CXL (uses PCIe lanes) |
| Limited PCIe lanes | NVDIMM-P (plugs into existing DIMM channels) |
| Need to scale memory across hosts | CXL with pooling switch |
| Want to stay socket-local, fast | NVDIMM-P |

Deployment & Scaling Philosophy

- NVDIMM-P is simple, socket-local, latency-focused, and excellent for DRAM extension.

- CXL Type-3 is composable, fabric-aware, and built for datacenter-scale flexibility.

Decision Matrix

| Feature / Goal | NVDIMM-P | CXL Type-3 |
|---|---|---|
| Latency-sensitive (e.g., Redis, HANA restart) | Suitable | Acceptable (higher latency) |
| Higher memory capacity per host | Yes | Yes |
| Multi-host memory sharing | No | Yes |
| Uses DDR5 DIMM slots | Yes | No |
| Uses PCIe lanes | No | Yes |
| Easier deployment today | Yes | Requires CXL-capable CPUs & BIOS |
| Long-term composable infrastructure | Limited | Strong |
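The matrix can be read as a small set of rules. The sketch below encodes one possible reading in Python. The rule ordering, the 350 ns threshold (the low end of the CXL latency range quoted in this section), and the minimum of 8 PCIe lanes (an x8 link) are assumptions for illustration, and the function does not try to detect conflicting constraints.

```python
from dataclasses import dataclass

CXL_MIN_LATENCY_NS = 350  # low end of the CXL Type-3 range quoted above
MIN_CXL_LANES = 8         # assumes an x8 link is the smallest practical option


@dataclass
class Requirements:
    latency_budget_ns: int          # worst acceptable average access latency
    needs_multi_host_pooling: bool  # must memory be shared across hosts?
    free_dimm_slots: int
    free_pcie_lanes: int


def recommend(req: Requirements) -> str:
    """Apply the matrix rules in priority order (the ordering is an assumption)."""
    if req.needs_multi_host_pooling:
        return "CXL Type-3"   # only CXL offers multi-host sharing
    if req.latency_budget_ns < CXL_MIN_LATENCY_NS:
        return "NVDIMM-P"     # CXL cannot meet the latency budget
    if req.free_dimm_slots == 0:
        return "CXL Type-3"   # no DIMM channels left to populate
    if req.free_pcie_lanes < MIN_CXL_LANES:
        return "NVDIMM-P"     # not enough PCIe lanes for a CXL device
    return "either"


print(recommend(Requirements(200, False, 4, 16)))  # latency-bound host
print(recommend(Requirements(500, True, 4, 16)))   # pooled memory needed
```

A real sizing exercise would also weigh cost, platform support, and availability, which this sketch leaves out.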

5. Real-World Workloads Benefiting from PMEM

Persistent memory isn’t just theoretical anymore — it’s in production across enterprise stacks. Here’s how leading teams are using it in the field:

SAP HANA Fast Restart

With PMEM configured in App Direct mode, SAP HANA can store columnar in-memory tables persistently.

Result: Database restart time drops from ~15 minutes to under 60 seconds after an OS reboot or crash.

Redis with App Direct Mode

When Redis is configured for PMDK back-end storage, it gains:

- Persistent key-value state even after crashes

- Lower write amplification

- Faster cold-start loading

VMware vSphere Persistent Memory

vSphere supports PMEM in:

- “vPMEM” mode (exposes PMEM to VMs as virtual NVDIMMs)

- “vPMEMDisk” mode (PMEM-backed datastores)

Used in VDI, SAP, and low-latency enterprise workloads, it cuts VM startup times and increases density.

DPU + Memory Disaggregation via CXL

Data Processing Units (DPUs) paired with CXL switches enable remote memory pooling across hosts.

This allows:

- Composable memory expansion on-demand

- Better DRAM/PMEM utilization per rack

- Clean separation of compute and memory domains

Use case: Edge computing, CDN nodes, or multi-tenant environments with dynamic scaling.

AI Inference Latency Trimming

When inference engines (e.g. TensorRT, ONNX Runtime) are memory-bound, persistent memory provides a mid-latency, high-capacity cache to avoid SSD-bound reads.

This benefits:

- LLM inference with token caching

- Image and speech pipelines needing high batch throughput

6. Total Cost of Ownership (TCO) Modeling

Here’s how PMEM compares when factoring in latency, endurance, and cost per GB, especially for workloads where DRAM alone doesn’t scale:

Latency vs Cost vs Endurance Snapshot

| Memory Tier | Latency | Cost/GB | Endurance | Use Case |
|---|---|---|---|---|
| DRAM | 80 ns | ~€8 | Very High | Active compute |
| PMEM | 120–500 ns | ~€3 | Medium (20–60 DWPD) | Tiered memory, fast restart |
| SSD (NVMe) | 80–100 µs | ~€0.08 | Low | Bulk storage |

Mini TCO Logic (Example)

Total Memory Need: 2 TB

Option 1: 100% DRAM

- Cost = €16,000

- Fast but not scalable

Option 2: 1 TB DRAM + 1 TB PMEM

- Cost = €11,000

- Only ~25% latency hit for cold-tier data

- Restarts are faster; endurance is still enterprise-grade

Result: ~30% cost savings with ~90% performance retention when tiered properly.
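The arithmetic behind this example can be reproduced with a few lines of Python. The sketch uses the approximate per-GB prices from the snapshot table and treats 1 TB as 1,000 GB to match the round numbers above. The `blended_latency_ns` helper and its DRAM hit-rate parameter are illustrative assumptions, not figures from a benchmark.

```python
DRAM_EUR_PER_GB = 8.0   # ~EUR 8/GB, from the snapshot table
PMEM_EUR_PER_GB = 3.0   # ~EUR 3/GB, from the snapshot table
DRAM_LATENCY_NS = 80.0


def tier_cost_eur(dram_gb: float, pmem_gb: float) -> float:
    """Hardware cost of a DRAM + PMEM mix."""
    return dram_gb * DRAM_EUR_PER_GB + pmem_gb * PMEM_EUR_PER_GB


def blended_latency_ns(dram_hit_rate: float, pmem_latency_ns: float) -> float:
    """Average latency when a fraction of accesses is served from DRAM."""
    return (dram_hit_rate * DRAM_LATENCY_NS
            + (1.0 - dram_hit_rate) * pmem_latency_ns)


all_dram = tier_cost_eur(2000, 0)     # Option 1: 2 TB DRAM
tiered = tier_cost_eur(1000, 1000)    # Option 2: 1 TB DRAM + 1 TB PMEM
savings_pct = (all_dram - tiered) / all_dram * 100

print(f"All-DRAM: EUR {all_dram:,.0f}")
print(f"Tiered:   EUR {tiered:,.0f}")
print(f"Savings:  {savings_pct:.2f}%")
print(f"Blended latency at 90% DRAM hits, 120 ns PMEM: "
      f"{blended_latency_ns(0.9, 120):.0f} ns")
```

The savings come out at 31.25%, in line with the ~30% quoted above. The performance side depends heavily on how often hot data is served from DRAM, which is why the hit rate is left as a parameter.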

7. The Road Ahead (2026–2028)

The next 36 months will define how persistent memory scales beyond stopgap solutions. Here’s what’s coming—and what it means for data center strategy.

MR-DIMM (Multiplexed Rank DIMM)

- Next-gen DDR5 module that stacks more ranks using a built-in multiplexer

- Allows twice the capacity per DIMM without increasing pin count

- Enables up to 2 TB per socket without touching PMEM

- First samples expected with Intel Granite Rapids (2026) and AMD Turin (Zen 5 EPYC)

HBM-PIM (Processing-In-Memory)

- Combines High Bandwidth Memory with onboard logic (PIM)

- Data is processed directly in-memory, reducing CPU load and data movement

- Ideal for AI inference, real-time analytics, and ultra-low-latency workloads

- Early demos by Samsung, SK Hynix, and UPMEM

CXL 3.0 Fabrics

- Enables memory pooling and switching across multiple hosts

- Adds multi-level switching, memory sharing, and fine-grained device partitioning

- Supports “memory as a service” in cloud-native environments

- Requires CXL-capable CPUs + memory switch silicon (e.g., Astera, Montage)

Composable Memory Demos (Liqid, Samsung)

- Samsung & Liqid have demoed rack-level memory disaggregation

- Real-world: boot a server with zero local DRAM, fetch memory over CXL

- Fully programmable memory topologies

- Early deployments in hyperscaler labs, telco edge pilots

Adoption Curve: Where Are We by 2028?

Developers need to adopt new programming models and tools to fully utilize persistent memory’s benefits. This adds a learning curve and development overhead, especially in environments with legacy systems or limited in-house expertise.

| Year | Technology Milestone | Who’s Adopting |
|---|---|---|
| 2025 | NVDIMM-P samples, CXL 2.0 boards | Labs, early edge |
| 2026 | Granite Rapids & Turin CPUs, CXL 3.0 fabrics | Tier-1 DCs, telco nodes |
| 2027 | MR-DIMM volume, HBM-PIM inference cards | AI, HPC |
| 2028 | Rack-scale memory disaggregation | Cloud-native and hyperscalers |

FAQ: Persistent Memory

What is the main difference between persistent memory and RAM?

Persistent memory (PMEM) retains data after power loss and offers higher capacity at a lower cost per GB, while RAM (DRAM) is faster but volatile. PMEM sits between RAM and storage in the memory hierarchy.

Can I still use Intel Optane in 2025?

You can use existing Optane products (DCPMM modules, P5800X SSDs) if your platform supports them, but Intel no longer manufactures or supports new Optane products. Expect sourcing issues and limited firmware compatibility.

What replaces Optane memory?

The two leading successors are:

- NVDIMM-P: Socket-local persistent memory on DDR5

- CXL Type-3: PCIe-based fabric-attached memory

Both provide non-volatile capacity with sub-microsecond latency.

What’s the latency of CXL memory?

CXL Type-3 memory devices typically have ~350–500 ns latency, depending on PCIe generation and topology. That is roughly 4–6× slower than DRAM (~80 ns), but still about 160–230× faster than NVMe SSDs (~80,000 ns).

Can you mix DRAM and PMEM?

Yes. Servers can be configured with both DRAM and PMEM modules, using:

- Memory Mode (PMEM appears as RAM, DRAM is cache)

- App Direct Mode (PMEM is a separate addressable tier)

Supported on platforms like Intel Ice Lake, Granite Rapids, and AMD Turin with proper BIOS and OS drivers.

Conclusion: Persistent Memory Is No Longer Optional

In 2025 and beyond, persistent memory is a strategic tool, not just a niche component.

Whether you’re upgrading servers, designing edge infrastructure, or scaling AI inference—DRAM alone won’t cut it.

NVDIMM-P offers the lowest-latency upgrade path.

CXL memory unlocks the future of pooled, fabric-based infrastructure.

Both are here, both are real, and both will reshape memory architectures over the next 36 months.

About the Author

Edgars Zukovskis

Board Member | CoreWave Labs

14+ years of expertise helping telecom operators, datacenters, and system integrators build efficient, cost-effective networks using compatible hardware solutions.
